Last updated 03-26-2024


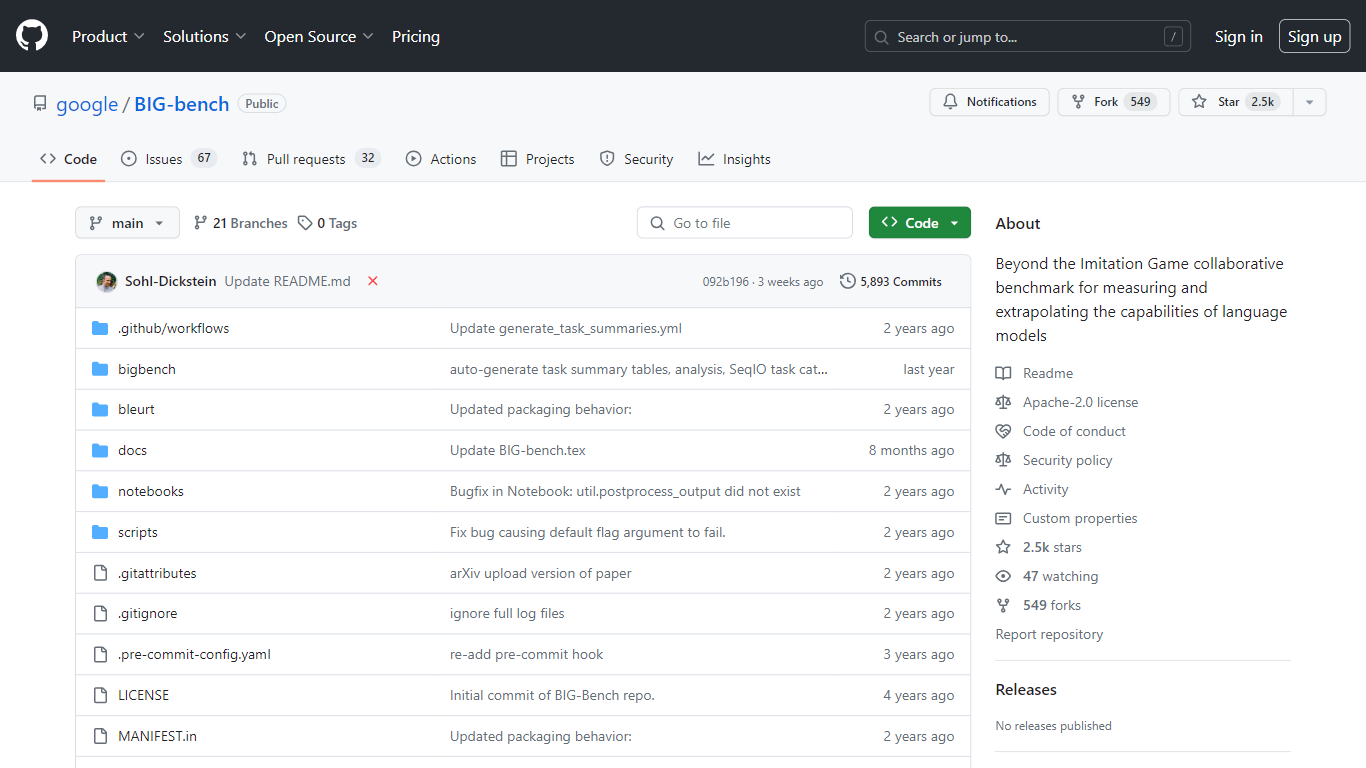

BIG-bench

BIG-bench (Beyond the Imitation Game benchmark) is an open, collaborative benchmark from Google, available on GitHub, for assessing the current capabilities of language models and extrapolating their future ones. It includes more than 200 tasks covering different aspects of language understanding and cognitive ability, and the tasks can be browsed by keyword or by task name. A publicly accessible preprint describes the benchmark and its evaluation on prominent language models. Researchers and developers can use the benchmark to gauge model performance and to estimate how that performance is likely to develop. The documentation in the GitHub repository covers task creation, model evaluation, and frequently asked questions.

- Collaborative Benchmarking: A wide range of tasks designed to challenge and measure language models.
- Extensive Task Collection: More than 200 tasks available to comprehensively test various aspects of language models.
- BIG-bench Lite Leaderboard: A trimmed-down version of the benchmark offering a canonical measure of model performance with reduced evaluation costs.
- Open Source Contribution: Facilitates community contributions and improvements to the benchmark suite.
- Comprehensive Documentation: Detailed guidance for task creation, model evaluation, and benchmark participation.

1) What is BIG-bench?

BIG-bench, or the Beyond the Imitation Game Benchmark, is a collaborative benchmark for measuring and extrapolating the capabilities of language models.

2) How many tasks are included in BIG-bench?

BIG-bench includes more than 200 tasks to evaluate various aspects of language models.

3) What is the purpose of BIG-bench Lite?

BIG-bench Lite is a subset of BIG-bench tasks designed to provide a canonical measure of model performance at a lower evaluation cost.

4) How can one contribute to BIG-bench?

Contributions can be made by adding new tasks, submitting model evaluations, or enhancing the existing benchmark suite through GitHub.

5) Where can I find the BIG-bench tasks and results?

The tasks and results can be found on the BIG-bench GitHub repository, with links to detailed instructions and leaderboards.
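To make the idea of contributing a task (question 4) more concrete, here is a minimal Python sketch of a small question-and-answer task and an exact-match score over it. It is an illustration under stated assumptions, not the benchmark's own code: the task name `toy_arithmetic`, the helper `exact_match_score`, and the stand-in `toy_model` are all hypothetical, and the field names are a simplified guess at a JSON-style task definition. Check them against the repository's task-creation documentation before relying on them.

```python
import json

# Hypothetical, simplified task definition. The field names are assumptions
# made for illustration; the repository documentation is the authority on
# the real task schema.
task = {
    "name": "toy_arithmetic",
    "description": "Answer simple addition questions.",
    "keywords": ["arithmetic", "zero-shot"],
    "metrics": ["exact_str_match"],
    "examples": [
        {"input": "1 + 1 = ", "target": "2"},
        {"input": "2 + 3 = ", "target": "5"},
        {"input": "4 + 4 = ", "target": "8"},
    ],
}


def exact_match_score(task, model_fn):
    """Return the fraction of examples whose output equals the target exactly."""
    examples = task["examples"]
    hits = sum(
        1 for ex in examples if model_fn(ex["input"]).strip() == ex["target"]
    )
    return hits / len(examples)


def toy_model(prompt):
    """Stand-in for a language model: parses 'a + b = ' and returns the sum."""
    left, right = prompt.rstrip("= ").split("+")
    return str(int(left) + int(right))


if __name__ == "__main__":
    # Serialise the task the way it might be stored in a JSON file.
    print(json.dumps(task, indent=2))
    print(exact_match_score(task, toy_model))
```

A real language model would replace `toy_model` with a function that takes a prompt string and returns generated text. The scoring loop stays the same.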
